Why Testing and Debugging Are Worth Your Time

Testing feels like it slows you down. It doesn't. It's the reason some teams ship fast and others don't.

Why Testing and Debugging Are Worth Your Time

There’s a bug in production. It’s 2am. Your phone is buzzing. You’re staring at code you wrote six months ago, trying to figure out what went wrong and why it’s only happening for users in a specific timezone with a particular account type.

This bug would have taken five minutes to catch with a test. Instead, it’s taking five hours to diagnose, plus the customer trust you’re burning while the system is broken.

This is the real cost of skipping tests. Not the time you “saved” by not writing them, but the time you’ll spend later, multiplied, when things go wrong in the worst possible way.

The Cost Curve Nobody Talks About

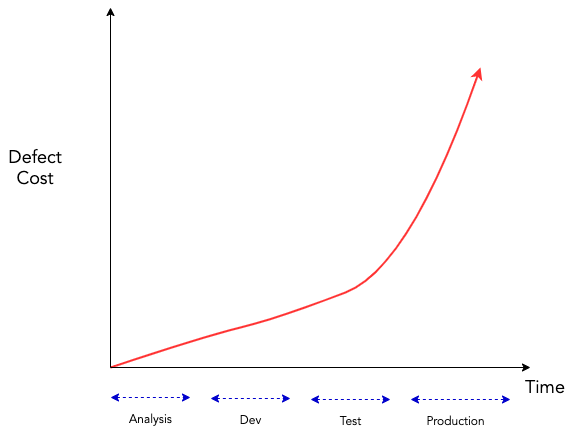

Barry Boehm’s research in software engineering economics revealed something that should change how every team thinks about quality: the cost to fix a defect increases exponentially the later you find it.1

A bug caught while you’re writing the code? Minutes to fix. The same bug caught in QA? Hours. In production? Days, plus incident response, plus customer communication, plus the fixes to the fixes.

This insight gave birth to the “Shift Left” approach, which advocates for earlier and more frequent testing in the development lifecycle. The idea is simple: if bugs are cheaper to fix early, find them early. Prioritizing testing from the start leads to fewer bugs in production and more predictable delivery timelines.

Microsoft and IBM validated this specifically for unit testing. Their research revealed a significant drop in defect rates in projects where developers wrote and maintained unit tests, compared to those that didn’t. These studies also indicated that unit testing resulted in more stable software and increased developer productivity.2 Fewer bugs reported by users meant less time fighting fires and more time building features.

More recent developments in software methodology reinforce this. Continuous Integration and Continuous Deployment (CI/CD) practices highlight the critical role of ongoing testing and debugging. These practices lead to faster feature development, improved code quality, and an overall more agile and responsive development cycle.3

This is the core insight: testing isn’t overhead that slows you down. It’s what makes sustained speed possible.

What Tests Actually Do

Tests validate that your code works as designed. But more importantly, they serve as a safety net that catches errors before users do.

Unit tests, the foundation of any testing strategy, focus on the smallest pieces of your system: individual functions and methods. They do three things:

They verify components work correctly. By testing small, isolated pieces, you ensure each part functions as expected. This forces modular design, which makes your codebase more maintainable and understandable. It reduces interdependencies, making it easier to identify, isolate, and fix issues without unintended side effects. Well-structured, modular code also makes it simpler to onboard new team members and allows for better parallelization of development work.

They validate how components interact. Beyond isolated units, tests confirm that different parts of your system interface correctly: call sequences, parameter passing, preconditions. This kind of testing ensures different components integrate seamlessly, reducing the likelihood of issues during more complex integration testing stages.

They catch edge cases. Extreme values, unexpected types, malicious inputs. These situations don’t happen often, but when they do, they cause the worst problems. By confirming that software handles these scenarios gracefully, not crashing or generating incorrect results, you’re fortifying your software against potential breakdowns and enhancing its resilience.

Debugging With Tests vs. Without

When a test fails, it points you to the exact location of the problem. You know what broke, you know what the expected behavior was, and you have a reproducible case to work with.

Without tests? You’re debugging in the dark. Something’s wrong somewhere. Users are reporting symptoms, not causes. You’re adding print statements, forming hypotheses, and hoping you find it before the next angry email.

By necessity, testing nudges you toward modular design, where software is composed of separate independent units. This principle makes your code more maintainable and easier to understand. When a test fails, you can isolate the issue to a particular module, making bugs quicker to diagnose and fix.

This is why developers often gain their deepest understanding of how their code actually works during testing. The creation of tests requires considerable creative problem-solving, a deep understanding of the software, and an ability to foresee potential issues from different angles. Writing tests forces you to think through what should happen, what could go wrong, and how components connect. That understanding makes everything else faster.

What This Looks Like in Practice

Say you’re adding a discount code feature to checkout. Without tests, you write the code, manually test the happy path, ship it.

Two weeks later, a customer reports they got charged full price even though the discount showed in the UI. You dig in. Turns out there’s a race condition between the discount validation and the payment processing. It only happens under specific timing conditions that your manual testing never hit.

Now you’re fixing a production bug, issuing refunds, and writing the tests you should have written in the first place.

With tests from the start, you’d have written a test for “discount is applied to final charge.” When you introduced the race condition, the test would have failed. You’d have fixed it in five minutes, not five days.

Same feature, same team. Radically different outcomes.

Helping Non-Technical Stakeholders Help You

Product managers and other stakeholders play a real role in testing quality. Their ability to bridge technical and business perspectives makes testing more effective.

Define clear acceptance criteria. Setting clear acceptance criteria forms the backbone of a project’s roadmap. These are conditions that software must meet to be accepted by users or stakeholders. It’s important to ensure they’re defined with clarity, as they set expectations and guide developers in their work.

Clear acceptance criteria should be specific and measurable. Instead of “the app should load quickly,” specify “the app should load within 3 seconds on a 4G connection.” This level of clarity guides development and testing teams to create more effective tests.

One of the most effective ways to write acceptance criteria is the Given-When-Then format, often used in Behavior Driven Development. Here’s how it works:

- Given provides the context. It describes the initial situation or precondition.

- When describes the key action, the event that triggers the behavior you want to test.

- Then defines the expected outcome or the observable changes due to the event.

For example:

- Given a user is logged in with items in their cart

- When they click checkout

- Then they should see those items on the checkout page

This structure makes requirements testable and understandable for everyone on the team, bridging the communication gap between technical and non-technical stakeholders.

Respect deep work time. Programming demands intense focus and periods of uninterrupted work. Balance the need for updates with respecting developers’ flow. Rather than relying heavily on meetings, consider asynchronous communication: project management tools, collaborative platforms, or scheduled email updates. When meetings are necessary, have clear agendas and actionable outcomes.

Understand the value. Comprehending the significance of testing is challenging for non-technical stakeholders, primarily due to the lack of direct, measurable business outcomes. But you can track bugs found in development vs. production, time spent on fixes, and issues reported after launch. These metrics provide insight into how a robust testing process impacts the product. By appreciating this value, stakeholders can better support development teams by allocating adequate resources and time for testing activities.

The Bottom Line

The most expensive code is the code you have to fix in production at 2am.

Testing and debugging feel like they slow you down because the cost is visible: you’re writing code that doesn’t ship features. But the alternative cost is invisible until it isn’t. The 3am incidents, the customer churn, the mounting tech debt from code nobody dares refactor because there are no tests to catch regressions.

Teams that test well ship faster because they can move with confidence. They refactor without fear. They onboard new developers who can make changes without breaking things they don’t understand.

Testing isn’t a tax on shipping. It’s what lets you ship with confidence, sustainably, for years.

References

- Boehm, B. W. (1981). Software Engineering Economics. Prentice-Hall, Inc.

- Nagappan, N., Maximilien, E. M., Bhat, T., & Williams, L. (2008). Realizing quality improvement through test driven development: results and experiences of four industrial teams. Empirical Software Engineering, 13(3), 289-302.

- Hilton, M., Tunnell, T., Huang, K., Marinov, D., & Dig, D. (2016). Usage, costs, and benefits of continuous integration in open-source projects. In Proceedings of the 31st IEEE/ACM International Conference on Automated Software Engineering (pp. 426-437).